A Concise History of Data in the AEC Industry: Part 3

Introduction

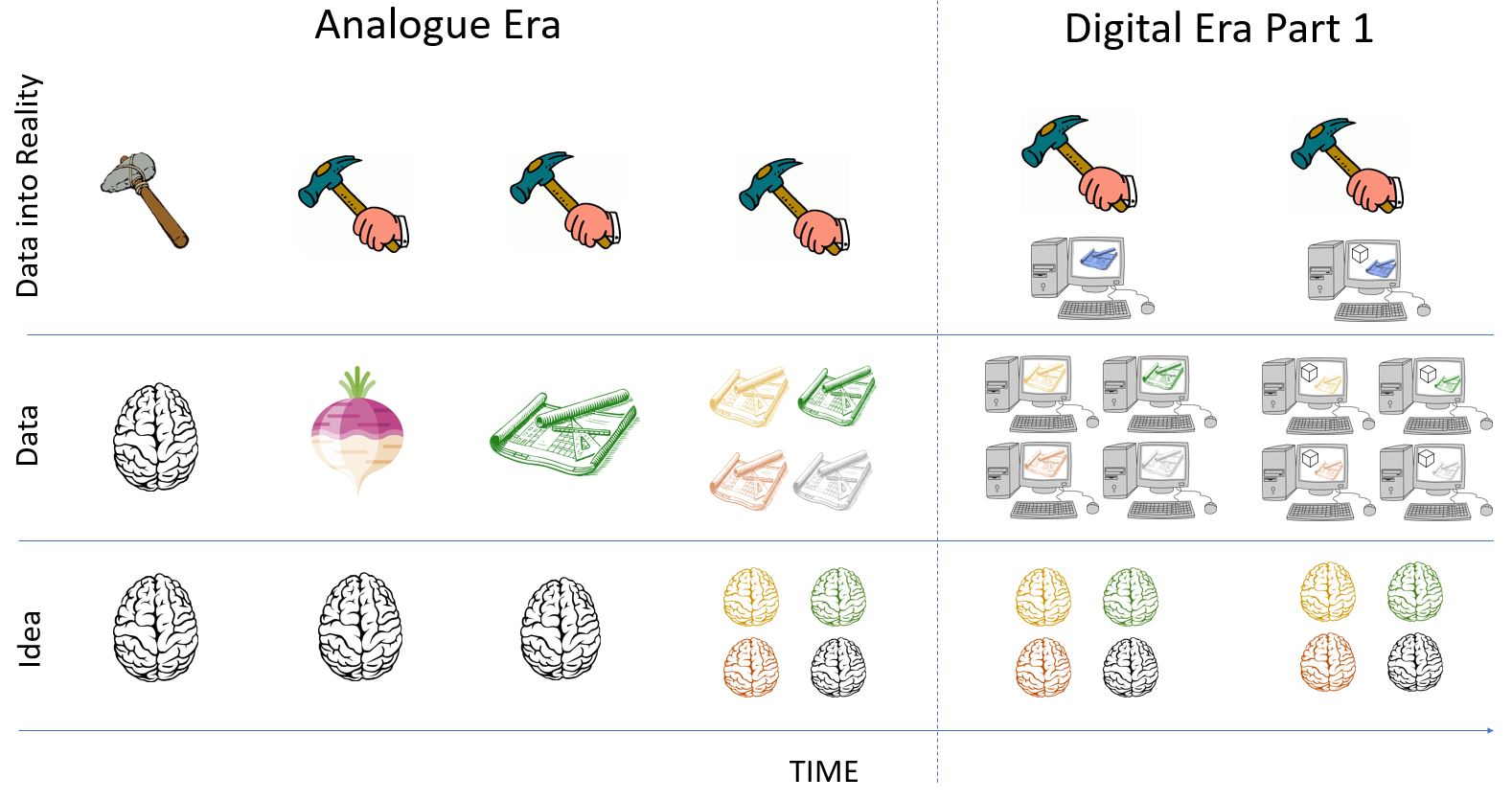

In Part 2, we introduced the second era in the history of AEC data, which spanned from the 1990s into the 2000s. We left off here:

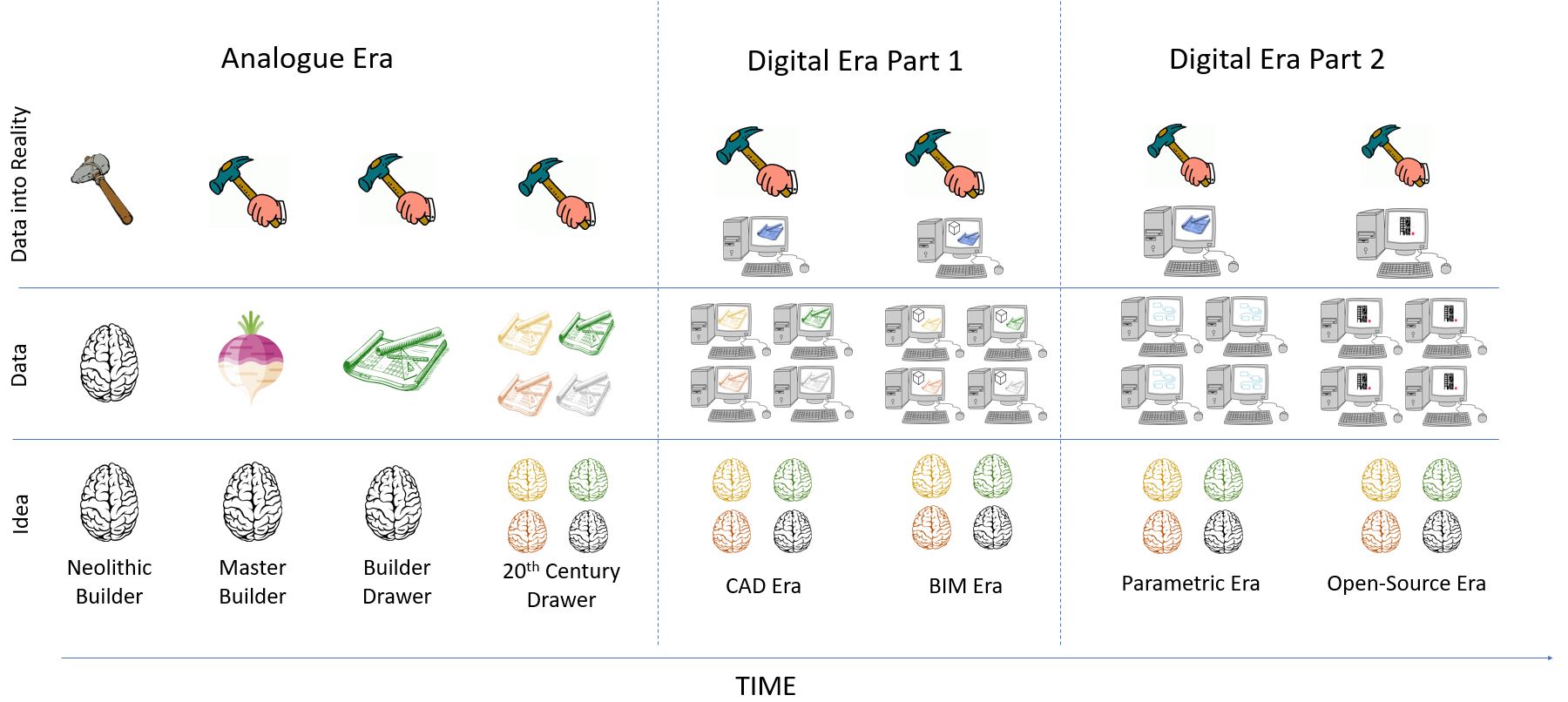

Recall that the three predominant eras of data in the design of the built environment are:

- Analogue Era (100,000 BCE – 1990s)

- Digital Era Part 1 (1990s – 2000s)

- Digital Era Part 2 (2000s – Present)

At the end of the first Digital Era, the data used to create the built environment was produced as a collaboration between a software company and an industry practitioner. The former took responsibility for the palette of digital objects through which the latter could communicate design ideas. In short, this era was dominated by off-the-shelf software, which increased the speed at which data could be created. As a result, the amount of data soared as an ever-increasing number of parties adopted industry-standard software.

In Part 3 of this discussion, we will introduce the Digital Era Part 2 – the era you and I are living in at the time of this writing! This era can be divided into two predominant parts:

- The Building Modeler 2.0: Define the Underlying Rules of the Thing

- The Building Data Guru: Mass participation in Coding

The key difference between the first digital era and the second is simple – who takes responsibility for the digital definitions of the objects which are ultimately going to be created? What does it mean to take responsibility for the digital definitions of objects? In short, it comes down to who took the time to code the definition of that object (a line, a beam, a finish, a facade, etc.) into the machine. Whilst the previous era was all about adopting software that someone else had coded for you, this era is all about you coding.

The Building Modeler 2.0: Define the Underlying Rules of the Thing

In the book Builders of the Vision, one gets a very good sense that our industry’s first dabbles in coding started in the A of AEC – architects. Namely, Frank Gehry and Gehry Technologies, who resorted to bespoke code to deliver projects such as the Guggenheim Museum Bilbao or the Abu Dhabi Guggenheim Museum. One of the key changes that came off the back of these projects was that the traditional skills of an architect needed to be augmented with a new literacy in computation. Architects were still doing what they had always done, making physical models, which involved:

“…. messy work of cutting, gluing, soldering, seeing, bending, wrinkling, and testing that designers perform iteratively until an acceptable material and spatial arrangement is achieved.”

Builders of the Vision, Chapter 6

However, because of the complexity of the designs being realized with these traditional methods, delivery would have been near impossible without the architects taking responsibility for digitally communicating the design that came out of this process. The industry therefore started to see people with expertise in:

“…three-dimensional scanning, geometric processing, coding, modeling, rationalizing, consulting, testing, and structural problem-solving performed by computationally savvy teams”

Builders of the Vision, Chapter 6

Whilst this trend started at the bleeding edge of the industry in specialist groups of elite architects, such as Gehry Technologies, it accelerated with the release of Grasshopper3D as a free plugin to Rhinoceros in 2007. Grasshopper3D (known as Grasshopper) had a profound impact on the overlap between computation and architectural design for one predominant reason – it lowered the bar to coding. The software was the first to be tailored to both our industry’s need for coding and its lack of focus on coding in our education. Grasshopper did this by using visual programming rather than text-based programming, attached to the already familiar CAD environment of Rhinoceros.

Grasshopper was not the only tool on the block that allowed architects to engage in coding. However, because its programming language was easy to learn and easy to debug live, the bar to entry was significantly reduced, which encouraged an explosion in participation in the architectural realm. The key behind this lowering of the bar is summed up brilliantly by Bret Victor in his presentation entitled “Inventing on Principle”, which I highly recommend if you want a high-level introduction to the topic of visual programming and its effect on how ideas come to life.

Of course, architects have traditionally been the first to the table on any project and therefore drive the industry in many regards. Architects empowered by computation could now deliver projects which engineers and contractors needed to design and build. This meant the other parties downstream of the architects would soon adopt a similar coding skillset to keep up.

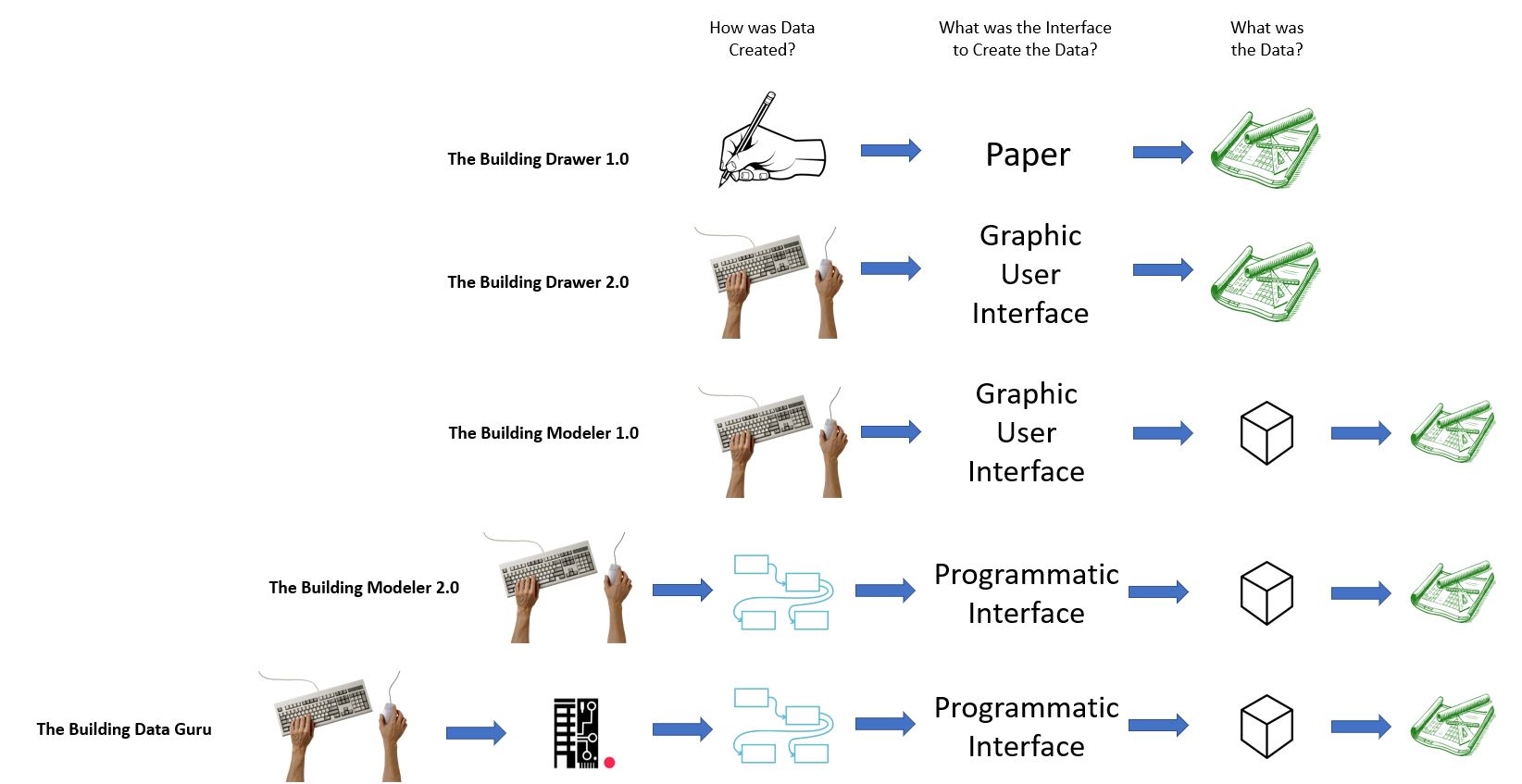

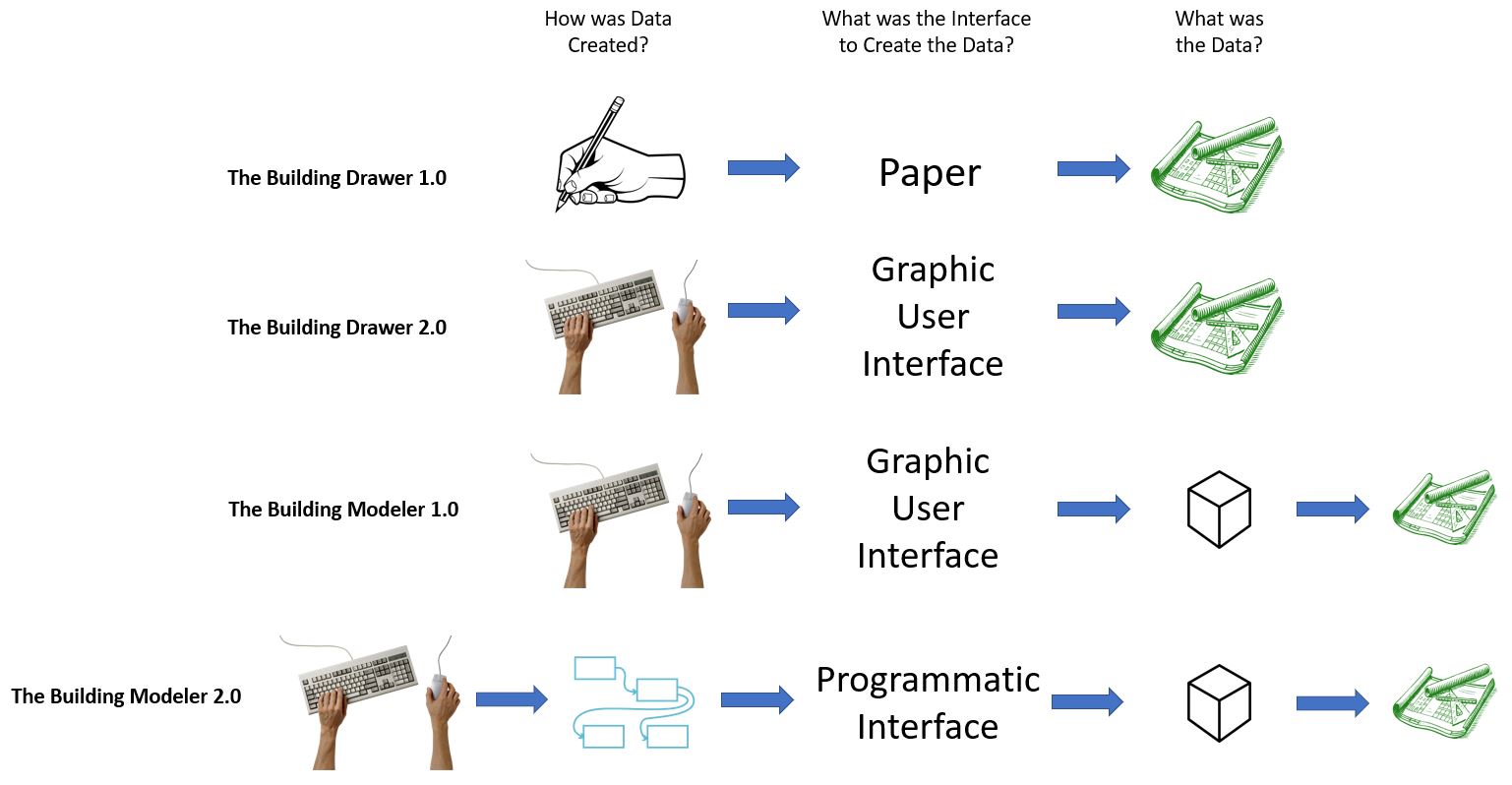

The key difference in this era versus previous eras is summed up in the figure below:

In this era, instead of using a piece of software’s predefined palette of digital objects to define a design manually, designers themselves are programming the definition of those objects using algorithms. These algorithms then create the data to be shared with the rest of the world. As can be seen in the above diagram, the result is another layer added to the diagram up front. In The Alphabet and the Algorithm, Mario Carpo sums up the difference between this era and the previous era as “two levels of authorship”. He explains:

“…at one level, the primary author is the designer of the generic object (or objectile: the program or series or generative notation);”

In the book, he likens the primary author to the creator of a videogame. He goes on to define the secondary author as such:

“…at another level, a secondary author specifies . . . the generic object in order to design individual end products.”

The secondary author, Carpo claims, is more or less like the player of the videogame. Put a different way – there’s a huge difference between manually using Revit (secondary authorship) and manually using Dynamo (closer to primary authorship, although still not 100% primary authorship). Carpo goes on to claim that those who choose to be primary authors and code will have a more “arduous” journey, but that the “rewards are greater” for those in that space.
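Carpo’s two levels can be made concrete with a small sketch. The Python below is purely illustrative: `Point`, `column_grid`, its parameters, and the values passed to it are hypothetical names invented for this example, not part of Revit, Dynamo, or Grasshopper. Writing the function is closer to primary authorship (defining the generic object), whereas choosing parameter values is secondary authorship (specifying one end product).

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Point:
    """A bare-bones 3D point (hypothetical, for illustration only)."""
    x: float
    y: float
    z: float


def column_grid(bays_x: int, bays_y: int, spacing: float, level: float = 0.0) -> List[Point]:
    """Primary authorship: the rule that can generate any regular column grid."""
    if bays_x < 1 or bays_y < 1 or spacing <= 0:
        raise ValueError("bays must be >= 1 and spacing must be positive")
    # One column at every grid intersection, so (bays + 1) points per direction.
    return [
        Point(i * spacing, j * spacing, level)
        for i in range(bays_x + 1)
        for j in range(bays_y + 1)
    ]


# Secondary authorship: picking values to get one specific design.
design_a = column_grid(bays_x=3, bays_y=2, spacing=6.0)
design_b = column_grid(bays_x=5, bays_y=5, spacing=7.5)
```

The same rule yields two different designs without anyone redrawing a single column, which is the sense in which the algorithm, rather than the designer’s hand, creates the data.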

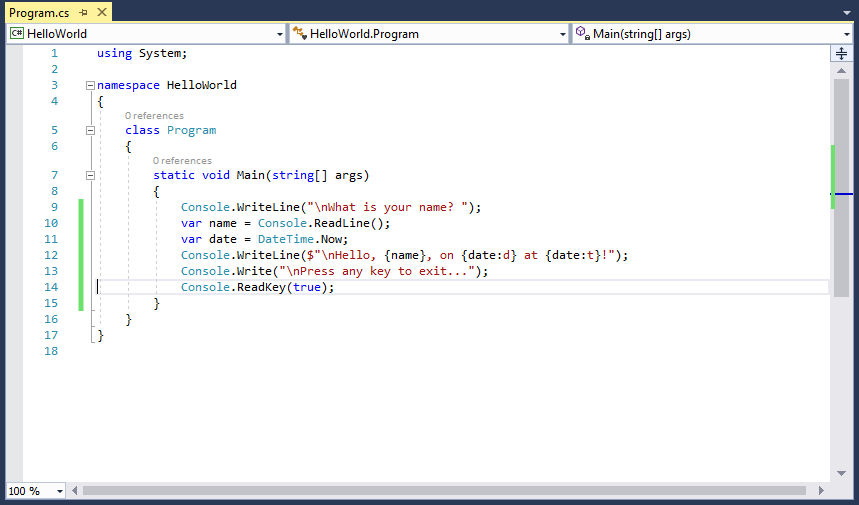

As I have discussed in other posts, with the bar into coding lowered, the AEC industry started to dabble in visual scripting, which served as the gateway drug to script-based coding. Whilst easier to learn than traditional text-based scripting, this ease comes at a cost in computational power – visual programming has limitations, and at some point it’s simply less efficient than script-based coding. This is recognized even by visual programming environments such as Grasshopper or Dynamo, which come with C#, Visual Basic, and Python Integrated Development Environments (IDEs), albeit basic ones. So by the end of this era (which came only a few months ago), pockets scattered around the industry were creating code instead of just using code which someone else had sold them. To summarize with our three main questions as they apply to this era:

- What’s the Data: Digital geometrical objects

- Who generated the data: Algorithms (AEC Software Company Programmatic Interface Used by Architects/Engineers)

- How was data transmitted to reality: Giving drawings over to construction people to copy into reality

The Building Data Guru: Mass participation in Coding

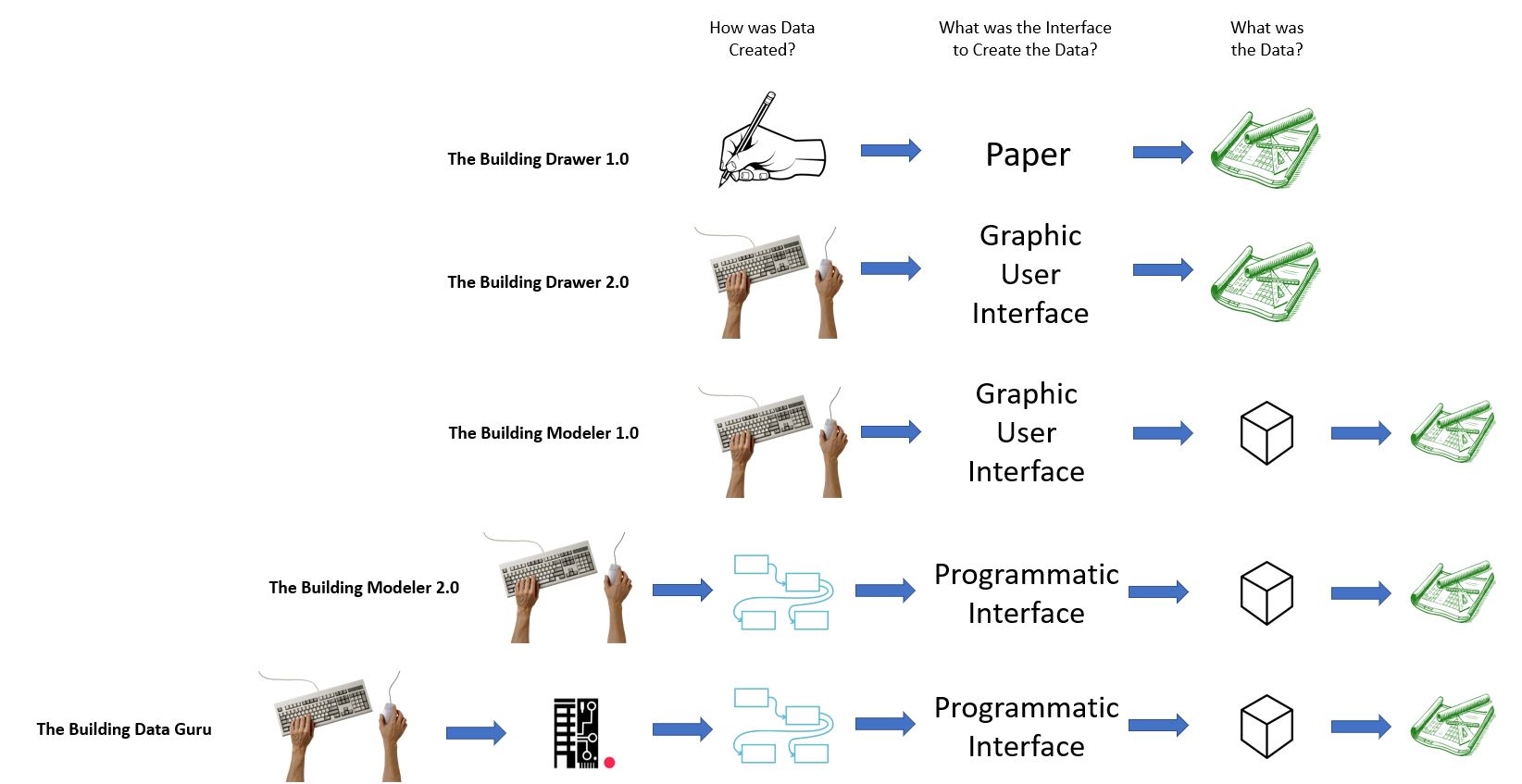

The figure above shows the current and final era of data. The open-sourcing of the Buildings and Habitats object Model (BHoM) in December 2018 ushered in this era. Whilst this era continues the previous era’s trend of designers taking responsibility for encoding their designs, it differs because the aim of the BHoM is to be a common digital vernacular for all AEC practitioners. This is why it represents yet another link in the chain on the diagram above. Yes, there will still be those who are simply users of the BHoM, accessing it via programmatic interfaces to create scripts which in turn create data to be shared with the world. However, there are now people focused on driving forward an open-source repository of code which will eventually converge towards a common digital vernacular. You may be wondering, “What is a common digital vernacular?” For the answer to that and much more, I recommend you have a look at this post. In short, the BHoM allows us to control our digital objects’ definitions like never before, and the process by which we create these definitions will create a common digital language that we all speak (both in spoken word and in digital communications).

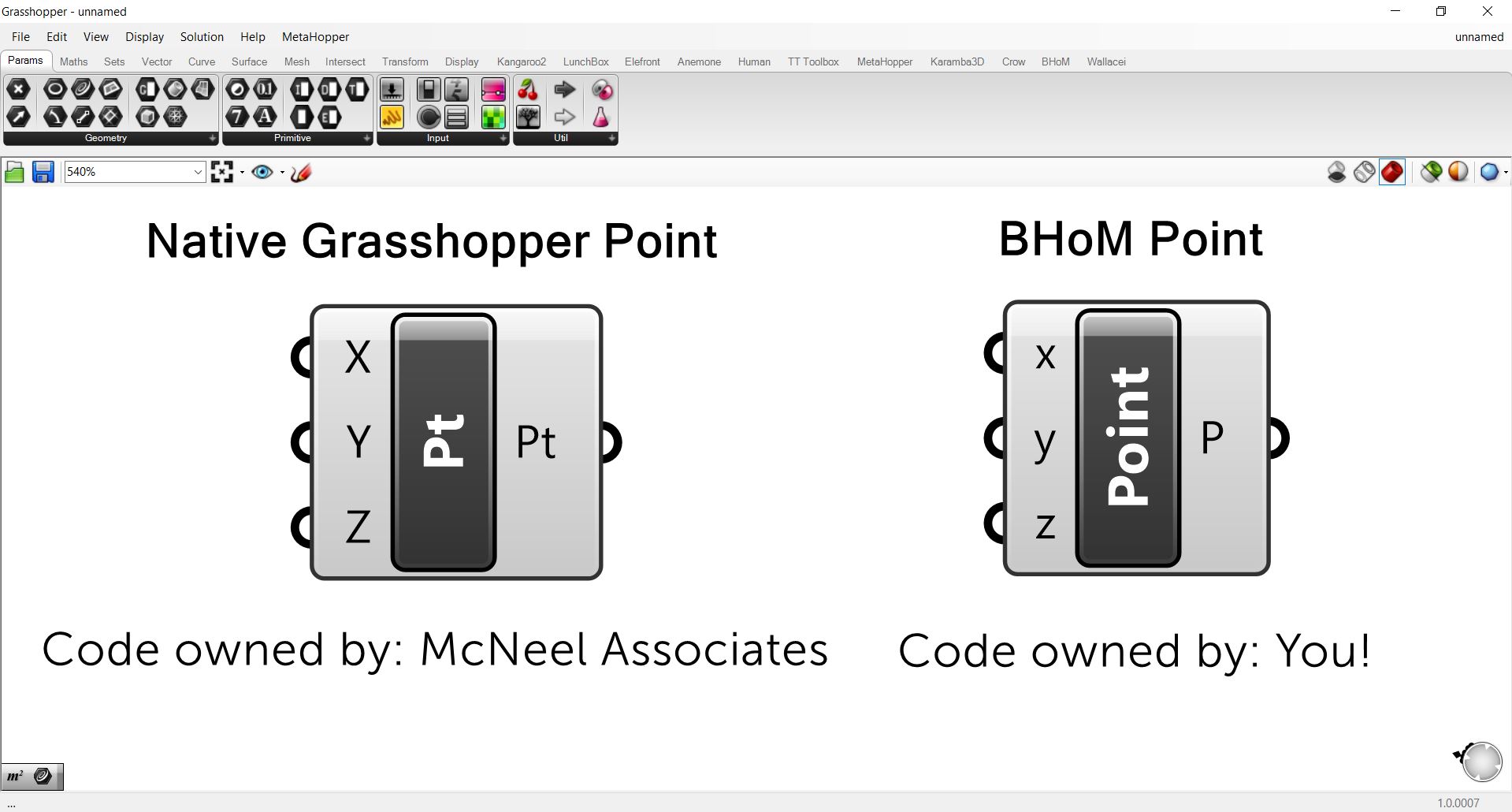

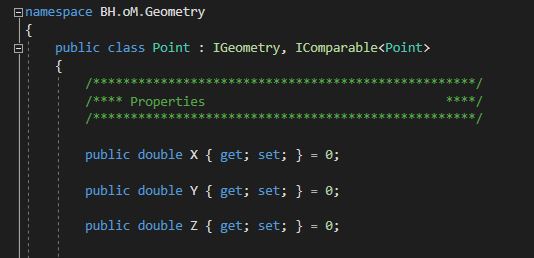

On a more practical level, a key change in this era is the availability of the underlying text-based code behind the tools you script with, usually in a visual programming environment or Excel. For instance, the code behind the “point” component in Grasshopper can only be accessed and controlled by Robert McNeel & Associates – you can neither access nor change it. However, the code behind the “point” component from the BHoM can be found on GitHub.

Equally, should you not like what you find in that code, you can clone it from GitHub, change the code in Visual Studio, recompile and boom – you’ve changed the code running behind your Grasshopper script. This is the key behind this era: the bar into coding has once again been lowered, only this time there is zero proprietary aspect to the code you are using – you are in control of your data, all the way to bedrock.
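To give a feel for what owning a definition means, here is a minimal sketch by analogy. It is written in Python for brevity and is not the BHoM’s actual source: the `Point` class, its fields, and the `distance_to` method are all hypothetical. The idea is simply that when the definition is open, every field is visible, and you can add behaviour of your own rather than waiting for a vendor to ship it.

```python
from dataclasses import dataclass, asdict
import json
import math


@dataclass
class Point:
    """Hypothetical open definition: every field and method is visible and editable."""
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0

    # A locally added method: the kind of change you could make
    # once the source code is yours to edit.
    def distance_to(self, other: "Point") -> float:
        return math.dist((self.x, self.y, self.z), (other.x, other.y, other.z))


a = Point(0.0, 0.0, 0.0)
b = Point(3.0, 4.0, 0.0)

print(a.distance_to(b))       # Euclidean distance between the two points
print(json.dumps(asdict(b)))  # the same object as plain data any tool could read
```

In a closed tool, both the fields and the behaviour of such an object are fixed by whoever wrote the software; here, both are ordinary text you can read and revise.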

So to summarize with our three main questions from this series as they apply to this era:

- What’s the Data: Digital geometrical objects

- Who generated the data: Algorithms (Open-Source Programmatic Interface Used by Architects/Engineers)

- How was data transmitted to reality: Giving drawings over to construction people to copy into reality

Conclusion

In this post, we have seen that a key change in the AEC industry’s approach to data in the 2000s started with a key change in who did the coding. Where previously anything digital in AEC was about adopting software that someone else had coded for you, this was the era where we saw architects, engineers, and contractors starting to code. This gives you a great litmus test for determining whether you or somebody else is a computational designer, engineer, or contractor – do you code? If you want that title of Computational [fill in the blank], then you had better answer ‘yes’.

We’ve covered a lot of ground in these three posts so let’s summarize everything as best we can in one graphic:

This post concludes the three-part series on the history of data as it relates to the creation of the built environment (the data that represents the ‘thing’ before it exists in the real world). There’s a whole other story of the data about the built environment after the building or city exists, but we will save that for another post. It should be clear after reading this concise history that the common thread tying all of us in this field together is data. Most importantly, the data which we use to communicate has undergone rapid transformations in the past few decades compared to the centuries that came before. What you should take away from this history is that it is important to think of design of the built environment as an exercise in data, not as an exercise in software such as CAD or BIM. In fact, for the vast majority of our history, our connection to the data which we used to communicate ideas was much closer (physical models and marks on paper). Once you realize that, you will realize that the future of the industry will be owned by those who can best create, manage, and control data. If you are someone who likes to code, this is good news for you.

I’d be remiss if I did not remind everyone that the start and end dates of these eras are quite blurry and that, in fact, the following quote is in full effect:

“The future is already here – it’s just not evenly distributed.”

William Gibson

When I joined the industry in 2013 as a structural engineer who knew a bit of C# and Grasshopper coding, I gave a presentation to my office on the cool stuff I could do with it. I still remember asking at the end of this presentation, “Any questions?” and there wasn’t a peep. I prodded for some sort of feedback and got the response – “We can’t give you feedback because we don’t know what it is you are talking about.” In 2013 in the structural engineering realm, coding was that new. Having said that, even the computational skills I had in 2013 would still make for a ‘superstar’ graduate engineer in 2019’s engineering market. This isn’t because I had a massive amount of computational skill (far from it), but rather because of the slow adoption of computation in the AEC industry. As of the time of this writing, having some skills in computation is still quite specialist or niche, even in the architectural communities (which, as we discussed, had a 10-year head start in the computational realm).

I hope that this series can inspire you to think differently about how you want to spend your career in AEC. I believe the eras I’ve presented here are the past for me, but they may be the future for you, depending on where you are on the skills spectrum. The key to becoming a computational engineer is to see design as an exercise in data as much as it is an exercise in engineering theory. The good news is – there are so many resources out there for you if you want to become a computational engineer, including on this blog!